👁️ The Face That Wasn’t Real

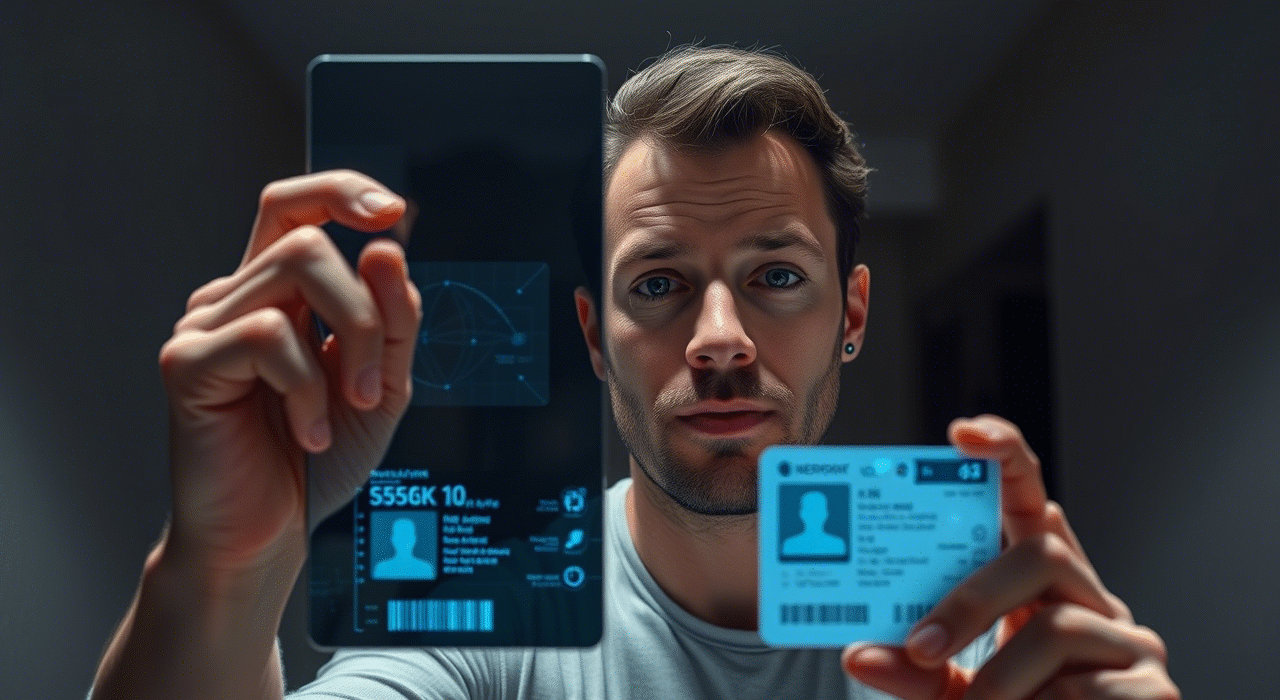

In February 2025, a Gibraltar-based online casino operator received what looked like a routine account registration: a 32-year-old Finnish player submitting their ID and a live selfie video as part of the KYC process. The ID passed OCR checks. The selfie video matched the face. The player even engaged in a few live chats with the support team.

Except—none of it was real.

The person in the video never existed. The ID was a synthetically generated Finnish passport. The “selfie” was a deepfake, created using AI-driven facial mapping tools trained on public Instagram images. By the time the fraud team caught the anomaly, the operator had already processed €8,700 in winnings withdrawals to a crypto wallet.

This wasn’t an isolated case. This was just the beginning.

🧠 What Is Deepfake ID Fraud?

Deepfake ID fraud is a new breed of cyberattack where bad actors use AI-generated images, videos, or even voices to bypass identity verification processes—particularly in remote onboarding systems like those used by online gambling platforms.

“We’re seeing a convergence of technologies—AI, synthetic media, and identity theft—all being weaponized,” says a senior compliance officer at a tier-1 sportsbook.

Where traditional fake IDs relied on Photoshop or physical forgeries, today’s fraudsters are leveraging deep learning models and tools like StyleGAN, FaceSwap, and ElevenLabs Voice AI to forge:

- Photo IDs that never existed

- Realistic live selfie videos

- AI-generated voice calls to support

And in many cases, these fakes are good enough to fool even high-end verification tools.

📈 Why Gambling Is a Prime Target

Online gambling platforms have become ground zero for deepfake abuse for several reasons:

🎯 1. KYC at Scale

Operators process thousands of new users per day, especially during major sports events. This scale makes it impossible for manual reviewers to catch every anomaly.

💸 2. Fast Money Flow

Unlike traditional banks, iGaming platforms often process withdrawals within minutes or hours, allowing fraudsters to extract funds before red flags are raised.

🪙 3. Crypto-Friendly Onboarding

Sites that allow crypto deposits and withdrawals are especially vulnerable, as attackers can avoid traditional banking scrutiny.

🌍 4. Jurisdictional Loopholes

Regulatory requirements vary widely across regions: what passes in Curaçao might never pass with the Malta Gaming Authority (MGA) or the UK Gambling Commission (UKGC).

🔍 How the Scams Work: 3 Popular Methods

⚠️ 1. Full Synthetic Identity

- Fake name, fake ID, deepfake selfie

- Often created using AI image generators and ID template kits

- Used to register as a new user, deposit, meet minimal play requirements, and withdraw

“Some of these identities are Frankenstein creations—real birthdates, fake faces, mixed countries,” says a fraud detection manager from a UKGC-licensed platform.

⚠️ 2. Real ID, Faked Owner

- Stolen real ID (via dark web leaks)

- Deepfake selfie or video of someone impersonating the ID holder

- Targets high-value accounts with known VIP perks

⚠️ 3. Voice Deepfakes in Support Calls

- Attackers use AI voice synthesis to spoof customers on calls

- Attempt account takeover or reset withdrawal credentials

One operator flagged 37 support calls in 2024 where the voice matched existing VIP users—but with tiny vocal anomalies (longer pauses, robotic cadence) that hinted at AI synthesis.
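The operator’s actual detection method isn’t described. As a minimal sketch of how the two cues it mentions could be scored, assume an upstream voice-activity detector (hypothetical here) already yields the pause durations between speech segments on a call, and that the operator stores a per-customer baseline from earlier verified calls. The function name, baseline format, and thresholds are illustrative assumptions, not tuned values.

```python
from statistics import mean, pstdev

def cadence_flags(pause_secs, baseline_mean, baseline_std,
                  z_threshold=3.0, min_cv=0.25):
    """Return a list of cadence anomalies for one support call.

    pause_secs: pause durations (s) between speech segments, assumed to come
        from an upstream voice-activity detector (hypothetical).
    baseline_mean, baseline_std: mean and standard deviation of the per-call
        average pause across the customer's earlier verified calls
        (hypothetical stored profile).
    """
    flags = []
    if len(pause_secs) < 2:
        return flags  # too few pauses to say anything
    avg = mean(pause_secs)
    # Longer pauses than this customer normally makes (z-score vs. baseline).
    if baseline_std > 0 and (avg - baseline_mean) / baseline_std > z_threshold:
        flags.append("long_pauses")
    # "Robotic cadence": pauses that barely vary from one to the next.
    if avg > 0 and pstdev(pause_secs) / avg < min_cv:
        flags.append("uniform_cadence")
    return flags

print(cadence_flags([0.9, 0.9, 0.91, 0.9], baseline_mean=0.4, baseline_std=0.1))
# ['long_pauses', 'uniform_cadence']
```

An irregular, human-like rhythm close to the stored baseline trips neither flag. On its own this is a weak signal for routing a call to extra verification, not a verdict.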

🔐 KYC & AML Vendors Are Scrambling

Even top-tier ID verification vendors—such as Jumio, Onfido, Veriff, and IDnow—have admitted that deepfakes present a major new threat vector.

⚙️ Existing Tools May Not Be Enough

- Liveness detection can be spoofed with video injection attacks

- Face match scores can be fooled with GAN-generated faces

- Manual reviews are slow, expensive, and often miss subtle AI anomalies
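A face match is commonly a similarity score between two face embeddings, compared against a threshold. The sketch below assumes the embeddings were already produced by some face-recognition model (not specified here) and uses cosine similarity with an illustrative 0.6 threshold. It shows why a full synthetic identity can pass: if the ID photo and the selfie were generated from the same fake face, the score is high, because the check asks “same face?” rather than “real face?”

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def face_match(id_embedding, selfie_embedding, threshold=0.6):
    """True if the ID-photo and selfie embeddings are similar enough to 'match'.

    The threshold is a hypothetical value; real systems calibrate it per model.
    Nothing here tests whether either face belongs to a real person.
    """
    return cosine_similarity(id_embedding, selfie_embedding) >= threshold

# Toy 3-D vectors standing in for real (typically much longer) embeddings.
generated_face = [0.2, 0.8, 0.1]
selfie_of_same_fake = [0.21, 0.79, 0.12]
print(face_match(generated_face, selfie_of_same_fake))  # True
```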

🔬 The Detection Arms Race

🧪 How Are Operators Fighting Back?

- Passive Liveness Checks

Analyzing micro-expressions, eyelid movement, and depth data during selfie video capture. These signals are harder for deepfakes to mimic.

- 3D Face Mapping

Depth-sensing cameras and 3D geometry validation can expose the flat video layers used in fakes.

- AI-on-AI Detection

Vendors like Sensity AI and Deepware Scanner now offer tools to detect synthetic artifacts invisible to the human eye.

- Behavioral Biometrics

Analyzing user behavior (typing speed, mouse movement, session duration) can flag synthetic users who behave too “perfectly.”
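The “too perfect” idea can be illustrated with keystroke timing. The sketch below is one simple heuristic, not any vendor’s method: it computes the coefficient of variation of the gaps between key presses and flags rhythms that are almost perfectly regular, as a replayed script might produce. The input format (client-side keypress timestamps) and the threshold are assumptions.

```python
from statistics import mean, pstdev

def too_perfect_typing(key_times_ms, min_cv=0.15, min_keys=10):
    """Flag typing whose inter-key intervals are suspiciously uniform.

    key_times_ms: timestamps (ms) of successive key presses in one form field,
    assumed to be collected client-side (hypothetical input format).
    min_cv is an illustrative threshold, not a tuned value.
    """
    if len(key_times_ms) < min_keys:
        return False  # too little data to judge either way
    intervals = [b - a for a, b in zip(key_times_ms, key_times_ms[1:])]
    avg = mean(intervals)
    if avg <= 0:
        return True  # every key at the same instant: likely injected input (assumption)
    # Coefficient of variation: spread of the rhythm relative to its average.
    return pstdev(intervals) / avg < min_cv

scripted = [i * 100 for i in range(12)]  # a key exactly every 100 ms
human = [0, 140, 220, 410, 480, 700, 760, 990, 1050, 1300, 1340, 1600]
print(too_perfect_typing(scripted), too_perfect_typing(human))  # True False
```

Real behavioral-biometrics products combine many such features (mouse paths, dwell times, session flow); a single ratio like this is easy for a careful attacker to randomize.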

📉 Real-World Impact: The 2025 Numbers

According to a joint report by iGaming Watchdog and Europol:

- Over 19 operators faced deepfake-related fraud attempts in Q1 2025.

- Estimated losses: €34 million, primarily in bonus abuse and VIP scams.

- Average fraud ring size: 3–6 identities per group, many automated.

- 70% of successful frauds involved crypto wallets or Asian payment gateways.

👨‍⚖️ Regulatory Reactions: Not Fast Enough?

While Tier 1 regulators like the UKGC, MGA, and Danish Gambling Authority have begun updating KYC expectations, there’s little industry-wide clarity on:

- What constitutes “deepfake fraud” legally?

- Who’s liable when synthetic IDs pass automated verification?

- What penalties apply if operators don’t catch them?

Some regulators are now urging operators to adopt “multi-layered KYC” combining document, biometric, and behavioral verification.

But in most cases, enforcement is still reactive, not proactive.

🤖 The Rise of Fraud-as-a-Service (FaaS)

Deepfake fraud is no longer just the domain of elite hackers.

Telegram and dark web markets now openly advertise:

- Custom deepfake ID bundles: €250–€600

- Video selfie generators: €1,200 lifetime access

- Voice AI spoof calls: €50 per minute with user prompts

“It’s now plug-and-play fraud,” says a cybercrime analyst. “Anyone with $500 and a Telegram account can start gaming casinos.”

🛑 What Operators Should Be Doing—Now

🔐 1. Upgrade to Multi-Modal ID Checks

No single method is foolproof. Combine:

- Document scan

- Selfie video with passive liveness

- Behavior & device fingerprinting
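One way to make “no single method is foolproof” concrete is to let the weakest layer decide, so a strong document or face score can’t average away a failed liveness check. A minimal sketch, assuming each layer already returns a score between 0 (likely fraud) and 1 (likely genuine); the score scale, layer names, and cut-offs are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

@dataclass
class CheckResult:
    name: str      # e.g. "document", "liveness", "device" (illustrative labels)
    score: float   # hypothetical scale: 0.0 = likely fraud, 1.0 = likely genuine

def kyc_decision(results: List[CheckResult], pass_at: float = 0.8,
                 fail_below: float = 0.4) -> Tuple[str, Optional[str]]:
    """Return (decision, weakest_check). The lowest-scoring layer drives the outcome."""
    weakest = min(results, key=lambda r: r.score)
    if weakest.score < fail_below:
        return "reject", weakest.name
    if weakest.score < pass_at:
        return "manual_review", weakest.name
    return "approve", None

checks = [CheckResult("document", 0.95), CheckResult("liveness", 0.30),
          CheckResult("device", 0.90)]
print(kyc_decision(checks))  # ('reject', 'liveness')
```

Here the average score is about 0.72, which a naive averaging rule might wave through; taking the minimum surfaces the failed liveness layer instead.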

🔎 2. Red Flag Anomalies

Watch for:

- Overly smooth skin in selfies

- No blinking or unnatural eye motion

- Consistent device/browser mismatch
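As a minimal sketch of the “no blinking” cue only, the code below uses the eye aspect ratio (EAR) from Soukupová and Čech’s blink-detection work, EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖), which drops sharply when the eye closes. It assumes a face-landmark detector (not shown) already supplies six (x, y) points per eye per frame; the 0.21 threshold, 2-frame minimum, and 10-second window are illustrative, not calibrated.

```python
import math

def eye_aspect_ratio(p):
    """EAR for six (x, y) eye landmarks ordered p1..p6.

    p1/p4 are the eye corners; p2, p3 sit on the upper lid above p6, p5 on the lower lid.
    """
    return (math.dist(p[1], p[5]) + math.dist(p[2], p[4])) / (2.0 * math.dist(p[0], p[3]))

def count_blinks(ears, threshold=0.21, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames with EAR below threshold."""
    blinks, run = 0, 0
    for ear in ears:
        if ear < threshold:
            run += 1
            continue
        if run >= min_frames:
            blinks += 1
        run = 0
    if run >= min_frames:  # clip ended while the eye was still closed
        blinks += 1
    return blinks

def no_blink_flag(ears, fps=30, min_seconds=10):
    """Flag clips long enough that a live person would be expected to blink, but didn't."""
    return len(ears) >= fps * min_seconds and count_blinks(ears) == 0

open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
print(eye_aspect_ratio(open_eye))  # 0.6666666666666666
print(count_blinks([0.3, 0.3, 0.1, 0.1, 0.3, 0.1, 0.3]))  # 1
```

Synthetic video that does blink will pass this check, so it works as a cheap first filter rather than a deepfake detector.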

📉 3. Monitor First-Time Withdrawal Behavior

High deposit + minimal play + fast withdrawal? Flag for manual review and enhanced re-verification.
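The rule above translates directly into a check run when a first withdrawal is requested. A minimal sketch: the record layout and all three thresholds (deposit size, turnover multiple, time window) are hypothetical placeholders for an operator’s own policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass
class FirstWithdrawal:
    deposited: float           # total deposited so far (EUR)
    wagered: float             # total staked so far (EUR)
    first_deposit_at: datetime
    withdrawal_at: datetime

def needs_manual_review(w: FirstWithdrawal,
                        min_deposit: float = 500.0,
                        max_turnover: float = 1.5,
                        max_gap: timedelta = timedelta(hours=24)) -> bool:
    """High deposit + minimal play + fast withdrawal -> route to manual review.

    All three thresholds are hypothetical placeholders.
    """
    high_deposit = w.deposited >= min_deposit
    minimal_play = w.wagered < max_turnover * w.deposited  # funds barely turned over
    fast_withdrawal = w.withdrawal_at - w.first_deposit_at <= max_gap
    return high_deposit and minimal_play and fast_withdrawal

suspicious = FirstWithdrawal(2000.0, 2100.0,
                             datetime(2025, 2, 1, 10, 0), datetime(2025, 2, 1, 13, 30))
print(needs_manual_review(suspicious))  # True
```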

🧠 4. Staff Education

Train compliance and fraud teams to recognize deepfake traits—even in voice calls and videos.

🤝 5. Collaborate with Vendors and Competitors

Share threat intelligence and fraud patterns across operators—especially in the same jurisdiction.
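Sharing raw indicators such as wallet addresses or device fingerprints between competitors raises privacy and commercial concerns. One possible pattern, sketched here under the assumption that participating operators agree on a secret key out-of-band, is to exchange keyed hashes (HMAC-SHA256) and compare tokens. The indicator kinds, key handling, and function names are hypothetical; any key holder can still test guesses, so this limits exposure rather than eliminating it.

```python
import hashlib
import hmac

def indicator_token(shared_key: bytes, kind: str, value: str) -> str:
    """Keyed SHA-256 (HMAC) of a fraud indicator such as a wallet address.

    Normalization is deliberately minimal (trim whitespace only): case matters
    in some formats, e.g. Base58 Bitcoin addresses.
    """
    message = f"{kind}:{value.strip()}".encode("utf-8")
    return hmac.new(shared_key, message, hashlib.sha256).hexdigest()

def find_overlaps(own, partner_tokens, shared_key):
    """Return our (kind, value) indicators whose tokens appear in a partner's list."""
    tokens = set(partner_tokens)
    return [(kind, value) for kind, value in own
            if indicator_token(shared_key, kind, value) in tokens]

# Hypothetical demo key; in production it would be agreed out-of-band, not hard-coded.
key = b"consortium-demo-key"
partner = [indicator_token(key, "wallet", "bc1qexamplewallet")]
ours = [("wallet", "bc1qexamplewallet "), ("device", "fp-7f3a")]
print(find_overlaps(ours, partner, key))  # [('wallet', 'bc1qexamplewallet ')]
```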

🧠 Final Thoughts

Deepfake ID fraud isn’t science fiction anymore; it’s today’s challenge. In an industry where speed and convenience often outrun caution, operators that don’t adapt will bleed cash, trust, and licenses.

It’s no longer enough to verify that a player is a real person. You need to verify they’re the right person—and that they’re real right now.